blogstage - static web server in Rust

I was looking for a useful service-oriented Rust project where I could test my project standards[1] and dive deeper into the language, so I decided to replace the Caddy file server that serves this blog. The result is called blogstage: a simple web server that provides my static blog to the world.

Usage: blogstage <ip:port> <path>, for example blogstage 0.0.0.0:80 ./blog/.


Requirements

The requirements were fairly simple, because the blog is static (a flat directory of rendered HTML files along with some images[2]) and my reverse proxy handles the TLS stuff:

- bind to a given address and port
- serve files from a given directory (and prevent serving other directories, e.g. via relative paths)
- serve both text (HTML) and binary data (images)
- not too easy, so that I actually have to dive deeper into Rust

How to serve

The requirements aren't hard to fulfill, so there are tons of crates that can do the job. For example, warp can do it in one line with something like warp::serve(warp::fs::dir("./blog")).run(([127, 0, 0, 1], 8080)).await. I decided against a ready-to-use crate to make it a bit more difficult and educational.

The crate web_server didn't work with dynamic strings, since routes need to be added as &'static str, and I couldn't figure out whether and how this can be worked around[3].
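For reference, a common workaround for APIs that demand &'static str is to leak a runtime String on purpose with Box::leak. A minimal sketch, not tried against web_server itself; to_static and static_routes are hypothetical helpers:

```rust
/// Deliberately leaks `s`, so the returned slice stays valid for the rest of
/// the program. Acceptable for a fixed set of routes registered once at
/// startup, since that memory would live until exit anyway.
fn to_static(s: String) -> &'static str {
    Box::leak(s.into_boxed_str())
}

/// Hypothetical helper: turns file names discovered at runtime into routes
/// such as "/index.html" that satisfy a `&'static str` parameter.
fn static_routes(file_names: Vec<String>) -> Vec<&'static str> {
    file_names
        .into_iter()
        .map(|name| to_static(format!("/{name}")))
        .collect()
}
```

Leaking is only reasonable for data that lives for the whole process anyway, such as routes registered once at startup; the memory is never reclaimed.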

The solution was surprisingly easy to find: there is a tutorial called Building a Multithreaded Web Server in the official Rust docs. What a coincidence!

Argument parser

Just two mandatory positional arguments without a default to keep things simple and explicit:

/* parse arguments */
let uri = match std::env::args().nth(1) {
    Some(uri) => uri,
    None => {
        println!("usage: blogstage <URI> <PATH>");
        return;
    }
};
let path = match std::env::args().nth(2) {
    Some(path) => path,
    None => {
        println!("usage: blogstage <URI> <PATH>");
        return;
    }
};
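The two match blocks could also be folded into a single pass over the argument iterator. A minimal sketch, assuming the caller prints the usage line itself; parse_args is a hypothetical helper, not part of blogstage:

```rust
/// Hypothetical helper: reads both positional arguments from one iterator,
/// e.g. `parse_args(std::env::args())`. Returns None if either is missing;
/// like the original, any further arguments are ignored.
fn parse_args(mut args: impl Iterator<Item = String>) -> Option<(String, String)> {
    let _program = args.next()?; // argv[0]: the binary's own name
    let uri = args.next()?;
    let path = args.next()?;
    Some((uri, path))
}
```

In main, this could be combined with let-else (Rust 1.65+): let Some((uri, path)) = parse_args(std::env::args()) else { println!("usage: blogstage <URI> <PATH>"); return; };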

File cache

The blog is pretty small, and its container[4] gets rebuilt on every change. Therefore I decided to load all files non-recursively into a hash map, with the file name as key and the content as value.

/* load files */
let raw_entries = match fs::read_dir(path.clone()) {
    Ok(entries) => entries,
    Err(e) => {
        println!("error reading files from {}: {}", path, e);
        return;
    }
};
let mut files = HashMap::new();
for entry in raw_entries {
    if !entry.as_ref().unwrap().path().is_file() {
        continue;
    }
    files.insert(
        entry
            .as_ref()
            .unwrap()
            .file_name()
            .into_string()
            .unwrap()
            .clone(),
        fs::read(entry.as_ref().unwrap().path().clone()).unwrap(),
    );
}
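Because the keys are bare file names and a request is only answered on an exact key match, this map is also what covers the "prevent serving other directories" requirement: a request for ../something simply finds no entry. The loading loop itself could propagate errors with ? instead of unwrapping. A minimal sketch; load_files is a hypothetical name, and unlike the original it skips file names that aren't valid UTF-8 instead of panicking:

```rust
use std::collections::HashMap;
use std::fs;
use std::io;
use std::path::Path;

/// Loads every regular file directly inside `dir` (non-recursively) into a
/// map from file name to content, propagating I/O errors instead of panicking.
/// File names that are not valid UTF-8 are skipped.
fn load_files(dir: &Path) -> io::Result<HashMap<String, Vec<u8>>> {
    let mut files = HashMap::new();
    for entry in fs::read_dir(dir)? {
        let entry = entry?;
        let path = entry.path();
        if !path.is_file() {
            continue; // skip subdirectories and other non-files
        }
        if let Ok(name) = entry.file_name().into_string() {
            files.insert(name, fs::read(&path)?);
        }
    }
    Ok(files)
}
```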

TCP

Although handling TCP myself felt a bit too low-level at first, it was quite pleasant in Rust, using only bind and the incoming iterator:

/* start server */
let listener = match TcpListener::bind(uri.clone()) {
    Ok(l) => l,
    Err(e) => {
        println!("error on binding to {}: {}", uri, e);
        return;
    }
};
for stream in listener.incoming() {
    match stream {
        Ok(s) => on_request(s, files.clone()),
        Err(e) => println!("error accepting connection: {}", e),
    }
}
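To try bind and incoming() in isolation, the following sketch binds to port 0 (so the OS picks a free port), connects a client from a second thread and reads what arrives on the first accepted connection. receive_once is a hypothetical test helper, not part of blogstage:

```rust
use std::io::{self, Read, Write};
use std::net::{TcpListener, TcpStream};
use std::thread;

/// Binds to an OS-assigned port on localhost, connects a client from a second
/// thread and returns what the server read from the first accepted connection.
fn receive_once(message: &'static str) -> io::Result<String> {
    let listener = TcpListener::bind("127.0.0.1:0")?;
    let addr = listener.local_addr()?;

    let client = thread::spawn(move || -> io::Result<()> {
        let mut stream = TcpStream::connect(addr)?;
        stream.write_all(message.as_bytes())
        // `stream` is dropped here, closing the connection (EOF for the server)
    });

    let mut received = String::new();
    // `incoming()` never ends on its own, so only the first connection is taken.
    if let Some(stream) = listener.incoming().next() {
        let mut stream = stream?;
        stream.read_to_string(&mut received)?;
    }
    client.join().expect("client thread panicked")?;
    Ok(received)
}
```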

HTTP

The request handler receives the TCP stream as well as the hash map containing all files:

pub fn on_request(mut stream: TcpStream, files: HashMap<String, Vec<u8>>)

Then the request is read line by line until the empty line that terminates the HTTP header:

let reader = BufReader::new(&mut stream);
let request: Vec<_> = reader
    .lines()
    .map(|result| result.unwrap())
    .take_while(|line| !line.is_empty())
    .collect();
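The same header-reading chain can be tried without a socket, because BufReader works on anything that implements Read. This sketch swaps the unwrap for map_while(Result::ok), so a read error (or a line that isn't valid UTF-8) ends the header instead of panicking the thread; read_header_lines is a hypothetical name:

```rust
use std::io::{BufRead, BufReader, Read};

/// Collects request lines up to (but excluding) the empty line that ends the
/// HTTP header block. `lines()` strips both "\n" and "\r\n" line endings.
fn read_header_lines(input: impl Read) -> Vec<String> {
    BufReader::new(input)
        .lines()
        .map_while(Result::ok) // stop at the first I/O error instead of panicking
        .take_while(|line| !line.is_empty())
        .collect()
}
```

Feeding it std::io::Cursor::new("GET /index.html HTTP/1.1\r\nHost: example.org\r\n\r\nbody") yields the request line and the Host line, but not the body.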

The requested file name is parsed quick ‘n’ dirty (see Vision). For convenience, an empty file name falls back to index.html:

let mut target: String = request[0].split(' ').collect::<Vec<&str>>()[1][1..].to_string();
if target.is_empty() {
    target = "index.html".into()
}
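A slightly more defensive variant of this parser, sketched under the assumption that returning None (and answering with an error or just closing the connection) is acceptable for malformed request lines; parse_target is a hypothetical name. It still doesn't percent-decode, so a file name with spaces (sent as %20) would still not match:

```rust
/// Extracts the requested file name from a request line such as
/// "GET /index.html HTTP/1.1". Returns None for malformed lines instead of
/// panicking and falls back to "index.html" for the root path.
/// Percent-decoding (e.g. "%20" for spaces) is intentionally not handled.
fn parse_target(request_line: &str) -> Option<String> {
    let mut parts = request_line.split_whitespace();
    let _method = parts.next()?;
    let path = parts.next()?.strip_prefix('/')?;
    if path.is_empty() {
        Some("index.html".to_string())
    } else {
        Some(path.to_string())
    }
}
```

In on_request, request.first().and_then(|line| parse_target(line)) would also cover a client that connects and sends nothing, where request[0] currently panics.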

To serve more complex files like images (and to be ready for OpenSCAD or MP3 files in the future), the MIME type is guessed via the crate… well… mime_guess:

let mime = mime_guess::from_path(target.clone()).first().unwrap();
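Note that .first() returns None when mime_guess knows no type for the name, e.g. for a request like /about without an extension, and the unwrap then panics. mime_guess also offers first_or_octet_stream() for exactly this case. The idea behind such a fallback, as a small hand-rolled sketch that does not use mime_guess at all (content_type and its tiny extension table are hypothetical):

```rust
use std::path::Path;

/// Hypothetical stand-in for a MIME lookup that never panics: unknown or
/// missing extensions fall back to application/octet-stream.
fn content_type(file_name: &str) -> &'static str {
    let ext = Path::new(file_name)
        .extension()
        .and_then(|e| e.to_str())
        .map(|e| e.to_ascii_lowercase());
    match ext.as_deref() {
        Some("html") | Some("htm") => "text/html",
        Some("css") => "text/css",
        Some("png") => "image/png",
        Some("jpg") | Some("jpeg") => "image/jpeg",
        Some("svg") => "image/svg+xml",
        _ => "application/octet-stream",
    }
}
```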

If the requested file is found in the hash map, its content is written to the stream along with a valid HTTP header. Otherwise, only an HTTP header with status code 404 is written.

On any error, the response handler panics due to the heavy use of unwrap, and the thread just vanishes into the void (see Vision):

match files.get(&target) {
    Some(body) => {
        let length = body.len();
        println!("200 {}", target);
        stream.write_all(
            format!("HTTP/1.1 200 OK\r\nContent-Length: {length}\r\nContent-Type: {mime}\r\n\r\n")
                .as_bytes()
        ).unwrap();
        stream.write_all(body).unwrap();
    }
    None => {
        println!("404 {}", target);
        stream
            .write_all("HTTP/1.1 404 NOT FOUND\r\n\r\n".as_bytes())
            .unwrap();
    }
};
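The 404 branch sends no Content-Length. Since the stream is closed once on_request returns, clients still detect the (empty) end of the response, but an explicit Content-Length: 0 makes it unambiguous. A sketch of the same response logic as a separate function that returns io::Result instead of unwrapping; write_response is a hypothetical name:

```rust
use std::io::{self, Write};

/// Writes a minimal HTTP/1.1 response: 200 with headers and body if `body`
/// is Some, otherwise a 404 whose explicit `Content-Length: 0` tells the
/// client that no body follows. Errors are returned instead of unwrapped.
fn write_response(out: &mut impl Write, body: Option<&[u8]>, mime: &str) -> io::Result<()> {
    match body {
        Some(body) => {
            let header = format!(
                "HTTP/1.1 200 OK\r\nContent-Length: {}\r\nContent-Type: {}\r\n\r\n",
                body.len(),
                mime
            );
            out.write_all(header.as_bytes())?;
            out.write_all(body)?;
        }
        None => out.write_all(b"HTTP/1.1 404 NOT FOUND\r\nContent-Length: 0\r\n\r\n")?,
    }
    out.flush()
}
```

A Vec<u8> implements Write, so the function can be checked without any socket or mock.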

Parallel request handling

Since I want to implement parallel request handling as simply as possible and don't need to limit the number of concurrent requests[5], spawning one thread per connection via thread::spawn does the trick:

for stream in listener.incoming() {
    match stream {
        Ok(s) => {
            let f = files.clone();
            thread::spawn(move || on_request(s, f));
        }
        Err(e) => {
            println!("error accepting connection: {}", e);
            continue;
        }
    }
}

The file map must be cloned, so its ownership can be moved to the thread.
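Cloning copies every cached file for each connection, which is cheap for a small blog but grows with it. A common alternative (not what blogstage does) is to wrap the map in std::sync::Arc once and clone only the pointer per thread; on_request would then take &HashMap<String, Vec<u8>> (or the Arc) instead of an owned map. A minimal sketch with a hypothetical function that just sums up the sizes of the requested files from several threads:

```rust
use std::collections::HashMap;
use std::sync::Arc;
use std::thread;

/// Shares one read-only file map between threads: `Arc::clone` only bumps a
/// reference count instead of copying every file's bytes per request.
fn total_bytes_in_parallel(files: HashMap<String, Vec<u8>>, requests: &[&str]) -> usize {
    let files = Arc::new(files);
    let handles: Vec<_> = requests
        .iter()
        .map(|name| {
            let files = Arc::clone(&files);
            let name = name.to_string();
            // Unknown names count as 0 bytes, like a 404 without a body.
            thread::spawn(move || files.get(&name).map_or(0, |body| body.len()))
        })
        .collect();
    handles.into_iter().map(|h| h.join().unwrap()).sum()
}
```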

Exit

It doesn't deserve to be called graceful, but the crate ctrlc provides a simple mechanism to abort all request handling at once when Ctrl+C is pressed:

ctrlc::set_handler(move || {
    std::process::exit(0);
})
.unwrap();

Testing

My testing goal was to cover the on_request function with unit tests and the CLI with integration tests.

Unit test

To test the on_request function, the stream must be mocked. A close look at what the function actually does with the stream reveals that we only need to read from and write to it. As described in the tutorial, TcpStream can be replaced with impl Read + Write, which accepts any type that implements both the Read and the Write trait:

pub fn on_request(mut stream: impl Read + Write, files: HashMap<String, Vec<u8>>)

The mock implements all methods needed to read from and write to it. I modified the tutorial's mock to be synchronous, since we don't use async:

use assert_cmd::prelude::*;
use predicates::prelude::*;
use std::cmp::min;
use std::collections::HashMap;
use std::io;
use std::io::{Read, Write};
use std::process::Command;

struct MockTcpStream {
    read_data: Vec<u8>,
    write_data: Vec<u8>,
}

// https://doc.rust-lang.org/std/io/trait.Read.html
impl Read for MockTcpStream {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        let size: usize = min(self.read_data.len(), buf.len());
        buf[..size].copy_from_slice(&self.read_data[..size]);
        Ok(size)
    }
}

// https://doc.rust-lang.org/std/io/trait.Write.html
impl Write for MockTcpStream {
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.write_data.extend_from_slice(buf);
        Ok(buf.len())
    }

    fn flush(&mut self) -> io::Result<()> {
        Ok(())
    }
}
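One caveat: this mock's read never advances, so every call returns the same bytes from the start. That's harmless here, because on_request stops at the first empty line, which arrives with the first read. A mock that consumes its input reaches end of stream like a real socket, so even a request without the terminating empty line ends the loop instead of replaying the same bytes. A sketch that delegates reading to std::io::Cursor (ConsumingMockStream is a hypothetical name):

```rust
use std::io::{self, Cursor, Read, Write};

/// Variant of the mock whose reads consume the input via Cursor, so once all
/// data has been returned, further reads yield Ok(0), i.e. end of stream.
struct ConsumingMockStream {
    read_data: Cursor<Vec<u8>>,
    write_data: Vec<u8>,
}

impl ConsumingMockStream {
    fn new(request: &[u8]) -> Self {
        Self { read_data: Cursor::new(request.to_vec()), write_data: Vec::new() }
    }
}

impl Read for ConsumingMockStream {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        self.read_data.read(buf) // advances the cursor position
    }
}

impl Write for ConsumingMockStream {
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.write_data.extend_from_slice(buf);
        Ok(buf.len())
    }

    fn flush(&mut self) -> io::Result<()> {
        Ok(())
    }
}
```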

Tests can then be written like this:

#[test]
fn serve_not_found() {
    let input = b"GET /test.html HTTP/1.1\r\n\r\n";
    let mut contents = vec![0u8; 1024];
    contents[..input.len()].clone_from_slice(input);
    let mut stream = MockTcpStream {
        read_data: contents,
        write_data: Vec::new(),
    };
    let files = HashMap::new();
    blogstage::on_request(&mut stream, files);
    let expected_response = "HTTP/1.1 404 NOT FOUND\r\n\r\n";
    assert!(stream.write_data.starts_with(expected_response.as_bytes()));
}

Integration test

Each integration test case ensures that the binary behaves correctly for the given environment and arguments. The following test checks that blogstage prints its usage when called without any arguments:

#[test]
fn uri_is_missing() -> Result<(), Box<dyn std::error::Error>> {
    let mut cmd = Command::cargo_bin("blogstage")?;
    cmd.assert()
        .success()
        .stdout(predicate::str::contains("usage: blogstage <URI> <PATH>\n"));
    Ok(())
}

- the cases are based on the CLI application testing section of the official docs
- execution and assertions are done via the crates assert_cmd and predicates
- the binary still exits successfully, since I haven't added exit codes yet
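Adding exit codes mostly means letting main return std::process::ExitCode instead of using a bare return. A minimal sketch of that split, assuming all setup errors are turned into a message string; run and exit_code are hypothetical helpers, and only the argument check is shown:

```rust
use std::process::ExitCode;

const USAGE: &str = "usage: blogstage <URI> <PATH>";

/// Hypothetical entry point: reports problems as Err(message) instead of a
/// bare `return`, so main can translate them into an exit code. Only the
/// argument check is sketched; loading files and serving would follow it.
fn run(args: &[String]) -> Result<(), String> {
    let [_program, _uri, _path] = args else {
        return Err(USAGE.to_string());
    };
    Ok(())
}

/// Prints the error message (to stdout, like the current usage output) and
/// maps the outcome to ExitCode::SUCCESS or the non-zero ExitCode::FAILURE.
fn exit_code(result: Result<(), String>) -> ExitCode {
    match result {
        Ok(()) => ExitCode::SUCCESS,
        Err(message) => {
            println!("{message}");
            ExitCode::FAILURE
        }
    }
}
```

main would then become fn main() -> ExitCode { exit_code(run(&std::env::args().collect::<Vec<_>>())) }, and uri_is_missing could assert .failure() instead of .success().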

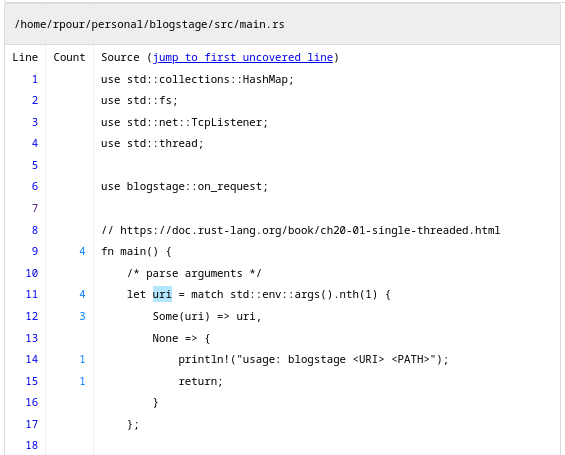

Coverage report

Coverage reports measure how much of the code is actually exercised by the tests. Based on those reports, it is easy to tell which branches still need tests.

The coverage profile can be enabled via environment variables, as described in the docs[6]:

RUSTFLAGS="-C instrument-coverage" LLVM_PROFILE_FILE="cargo-test-%p-%m.profraw" cargo test

The LLVM coverage tools provide rust-profdata and rust-cov to process the generated raw coverage data. All *.profraw files can be merged via

rust-profdata merge -sparse *.profraw -o coverage.profdata

and converted to HTML using

rust-cov show target/debug/blogstage -instr-profile=coverage.profdata --ignore-filename-regex=/.cargo --format=html --show-line-counts-or-regions > coverage/index.html

Unfortunately, I didn't get the unit tests covered, although they all passed. The integration tests, on the other hand, worked out of the box.

Benchmark

Since the files are cached in memory and the service is tightly tailored to my use case, you may ask: is it faster?

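Well, only partly, and only by a tiny bit. I used mildsunrise's curl-benchmark with 100 requests and an insecure connection[7]. Compared to Caddy, blogstage downloads the content slightly faster on average (0.7 vs. 1.1) and with a lower maximum (2.0 vs. 10.0), but its time to first byte is higher (68.3 vs. 60.1 on average):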

Caddy file server:

      DNS        TCP        SSL        Request               Content
      lookup     connect    handshake  sent       TTFB       download
min:  5.0        51.0       22.0       28.0       54.0       0.0
avg:  5.7        55.6       35.8       32.2       60.1       1.1
med:  5.0        55.0       36.0       32.0       60.0       1.0
max:  15.0       67.0       45.0       41.0       86.0       10.0
dev:  21.5%      5.5%       10.7%      8.1%       7.5%       106.1%

blogstage:

      DNS        TCP        SSL        Request               Content
      lookup     connect    handshake  sent       TTFB       download
min:  5.0        51.0       26.0       28.0       58.0       0.0
avg:  5.4        55.9       34.9       32.3       68.3       0.7
med:  5.0        55.0       35.0       32.0       68.0       1.0
max:  7.0        68.0       46.0       41.0       77.0       2.0
dev:  12.3%      4.9%       11.2%      6.9%       5.9%       95.5%

Wrap it up

src/main.rs:

use std::collections::HashMap;
use std::fs;
use std::net::TcpListener;
use std::thread;

use blogstage::on_request;

// https://doc.rust-lang.org/book/ch20-01-single-threaded.html
fn main() {
    /* parse arguments */
    let uri = match std::env::args().nth(1) {
        Some(uri) => uri,
        None => {
            println!("usage: blogstage <URI> <PATH>");
            return;
        }
    };
    let path = match std::env::args().nth(2) {
        Some(path) => path,
        None => {
            println!("usage: blogstage <URI> <PATH>");
            return;
        }
    };

    /* load files */
    let raw_entries = match fs::read_dir(path.clone()) {
        Ok(entries) => entries,
        Err(e) => {
            println!("error reading files from {}: {}", path, e);
            return;
        }
    };
    let mut files = HashMap::new();
    for entry in raw_entries {
        if !entry.as_ref().unwrap().path().is_file() {
            continue;
        }
        files.insert(
            entry
                .as_ref()
                .unwrap()
                .file_name()
                .into_string()
                .unwrap()
                .clone(),
            fs::read(entry.as_ref().unwrap().path().clone()).unwrap(),
        );
    }

    /* start server */
    let listener = match TcpListener::bind(uri.clone()) {
        Ok(l) => l,
        Err(e) => {
            println!("error on binding to {}: {}", uri, e);
            return;
        }
    };

    // react to Ctrl+C
    ctrlc::set_handler(move || {
        std::process::exit(0);
    })
    .unwrap();

    for stream in listener.incoming() {
        match stream {
            Ok(s) => {
                let f = files.clone();
                thread::spawn(move || on_request(s, f));
            }
            Err(e) => {
                println!("error accepting connection: {}", e);
                continue;
            }
        }
    }
}

src/lib.rs:

use std::collections::HashMap;
use std::io::{prelude::*, BufReader, Read, Write};

// Everything that should be tested via integration tests has to live outside of main.rs:
// https://doc.rust-lang.org/book/ch11-03-test-organization.html#integration-tests-for-binary-crates
pub fn on_request(mut stream: impl Read + Write, files: HashMap<String, Vec<u8>>) {
    let reader = BufReader::new(&mut stream);
    let request: Vec<_> = reader
        .lines()
        .map(|result| result.unwrap())
        .take_while(|line| !line.is_empty())
        .collect();

    let mut target: String = request[0].split(' ').collect::<Vec<&str>>()[1][1..].to_string();
    if target.is_empty() {
        target = "index.html".into()
    }

    let mime = mime_guess::from_path(target.clone()).first().unwrap();

    match files.get(&target) {
        Some(body) => {
            let length = body.len();
            println!("200 {}", target);
            stream.write_all(
                format!("HTTP/1.1 200 OK\r\nContent-Length: {length}\r\nContent-Type: {mime}\r\n\r\n")
                    .as_bytes()
            ).unwrap();
            stream.write_all(body).unwrap();
        }
        None => {
            println!("404 {}", target);
            stream
                .write_all("HTTP/1.1 404 NOT FOUND\r\n\r\n".as_bytes())
                .unwrap();
        }
    };
}

Vision

- compression: add gzip compression
- refactor the request parser: besides its poor readability, it fails e.g. if a file name contains spaces
- eliminate unwraps: explicit error handling instead of “resilience by accident”

[1] like testing, coverage, release, CI, …
[2] via blogctl
[3] see 70a9c21e
[4] To simplify operations, I build a container containing the whole blog. I replaced the Caddy container with an Alpine one.
[5] in comparison to the ThreadPool approach of the multithreaded server tutorial
[6] Those environment variables can also be used for cargo build to generate a coverage binary.
[7] python curl-benchmark.py -- -L -k evilcookie.de, since somehow the SSL handshake didn't work…